Introduction

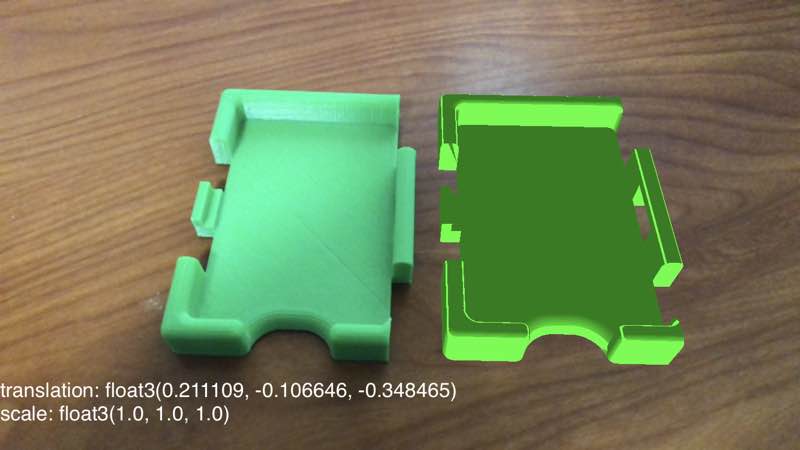

Ever since ARKit was released, I’ve had the idea to build an app that would let people preview models for 3D printing in an AR scene. It would allow the user to place their objects in the AR environment, and then manipulate and rotate them in a realistic manner. For this post, I’ll be using the interlocking card holders I designed for Settlers of Catan as a demo. (They lock together to form a Monopoly-style bank for all of the resource and development cards.)

There are a number of things on my to-do list for the app:

- iCloud Drive integration and file type associations for loading files via the share sheet.

- User-configurable scaling & orientation, since some STL files may use different units/coordinate systems.

- Fallback for non-ARKit devices to a simple Metal-based view mode with one model centered onscreen. This should give us the ability to support iPhone 5s, iPad Air, iPad mini 2, and newer, even if ARKit is not available. [1]

- Plane detection: Display a light, transparent grid on detected planes (user-configurable, and mainly for debugging).

- Plane projection: When a user taps the screen, that tap’s vector should be extended to intersect with a detected plane, if one exists. The object would be placed relative to that projected coordinate to simulate real-world interaction.

- Shaders: Realistic shading for the printer’s filament layers based on the normal vectors for each polygon. Normal vectors for a polygon that is parallel to the vertical axis should be shaded with lines on the x = z plane, to simulate an individual layer. All other triangles should be shaded based on the y-value and the configured layer height (about 0.3mm).

- Model downscaling: Simplify complicated models if individual polygons are so small they will not be noticed, to improve performance.
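The plane projection item in the list above comes down to a ray-plane intersection: extend the tap into a ray from the camera and find where it meets a detected plane. Below is a minimal sketch of that math using only standard-library SIMD types. The function names, the plane representation (a point plus a normal), and the sample values are all assumptions made for illustration and are not taken from the app. ARKit's own hit-testing API may make a hand-rolled version unnecessary.

```swift
// Hypothetical helpers for illustration only; none of these names come from the app.

/// Dot product built from standard-library SIMD operations.
func dotProduct(_ a: SIMD3<Float>, _ b: SIMD3<Float>) -> Float {
    return (a * b).sum()
}

/// Returns the point where a ray meets a plane, or nil if the ray is
/// parallel to the plane or the plane lies behind the ray's origin.
func intersect(rayOrigin: SIMD3<Float>,
               rayDirection: SIMD3<Float>,
               planePoint: SIMD3<Float>,
               planeNormal: SIMD3<Float>) -> SIMD3<Float>? {
    let denominator = dotProduct(rayDirection, planeNormal)
    // A near-zero denominator means the ray runs parallel to the plane.
    guard abs(denominator) > 1e-6 else { return nil }

    let t = dotProduct(planePoint - rayOrigin, planeNormal) / denominator
    // A negative t would put the intersection behind the camera.
    guard t >= 0 else { return nil }

    return rayOrigin + rayDirection * t
}

// Assumed sample values: a camera 1.5 m above a floor plane at y = 0,
// with the tap ray pointing down and forward (negative Z).
let hit = intersect(rayOrigin: SIMD3<Float>(0, 1.5, 0),
                    rayDirection: SIMD3<Float>(0, -1, -1),
                    planePoint: SIMD3<Float>(0, 0, 0),
                    planeNormal: SIMD3<Float>(0, 1, 0))
```

With these sample values the ray meets the floor 1.5 m in front of the camera. The model would then be positioned relative to that point.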

Getting Started

To start, I created an ARKit & Metal/Swift starter project using Xcode. In an earlier proof-of-concept, I was using SceneKit based on one of Apple’s demos, but it didn’t give me the control over the models and display I was looking for in any obvious way. STL files contain just vertex information, with no color or textures, so they’d appear white inside of SceneKit. I have some experience using OpenGL, so I decided to take this opportunity to explore Apple’s Metal for the task, since it’d give me the most control over the scene and rendering pipeline. Most of the colorization and lighting can then be done inside of the shaders, independent of the model logic in Swift.

Luckily, iOS’s Model I/O framework supports all sorts of formats and allowed me to easily load the raw STL file into a mesh that can be drawn in the Metal pipeline. I ran into a few issues:

- Model coordinate systems are different.

  - As it turns out, ARKit’s coordinate system is defined in meters, and the STL file output by Autodesk Inventor appears to have its units set to centimeters. Applying a scale transform to the transformation matrix for the model’s anchor fixed this.

- Model rotation is inconsistent.

  - The example model needed to be rotated 90° about the X-axis.

  - This is mainly due to the axes of the original CAD model being incorrectly aligned with their real-world counterparts. From what I remember, this is a problem with many STL models, depending on what software created them.

  - As a result, this will need to be a user-configurable setting per model.

- Apple’s demo has the Z-axis inverted, causing our models to be flipped vs. their real-world counterparts!

  - However, disabling this transform causes lighting to be calculated incorrectly with the default shaders and uniform settings.

  - Since we’ll be re-doing the Metal shaders later, it shouldn’t be too much of an issue.

- From the starter template:

```swift
// Flip Z axis to convert geometry from right handed to left handed
// We will need to remove this.
var coordinateSpaceTransform = matrix_identity_float4x4
coordinateSpaceTransform.columns.2.z = -1.0
```

Current Progress
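The unit and rotation fixes described under Getting Started can be sketched as a per-model transform applied on top of the anchor's transform. This is a rough illustration under stated assumptions, not the app's actual code: the helper names, the 0.01 scale factor (centimeters to meters), and the sign of the rotation are all hypothetical and would become user-configurable settings.

```swift
import Foundation
import simd

// Hypothetical per-model settings; names and values are illustrative only.
let unitScale: Float = 0.01            // assumes the STL is in centimeters (1 cm = 0.01 m)
let xRotation: Float = -Float.pi / 2   // 90° about X; the sign depends on the model

/// Uniform scale matrix.
func makeScale(_ s: Float) -> simd_float4x4 {
    return simd_float4x4(diagonal: SIMD4<Float>(s, s, s, 1))
}

/// Rotation about the X-axis by `angle` radians (right-handed, column-major).
func makeRotationX(_ angle: Float) -> simd_float4x4 {
    let c = cos(angle)
    let s = sin(angle)
    return simd_float4x4(columns: (
        SIMD4<Float>(1, 0, 0, 0),
        SIMD4<Float>(0, c, s, 0),
        SIMD4<Float>(0, -s, c, 0),
        SIMD4<Float>(0, 0, 0, 1)
    ))
}

/// Scale first, then rotate, then place the result at the anchor.
func modelMatrix(anchorTransform: simd_float4x4) -> simd_float4x4 {
    return anchorTransform * makeRotationX(xRotation) * makeScale(unitScale)
}

// Example: a vertex 10 cm up the CAD model's Z-axis, with an identity anchor.
let vertex = SIMD4<Float>(0, 0, 10, 1)
let placed = modelMatrix(anchorTransform: matrix_identity_float4x4) * vertex
```

With these assumed settings, a point 10 cm along the CAD model's Z-axis should end up roughly 0.1 m along ARKit's Y-axis, which is the "up" direction in world space when ARKit aligns to gravity.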